Trustworthiness in the Age of AI

It probably feels liberating to be a little bit wrong, all of the time.

Computers are, fundamentally, excellent calculators. Nearly every bit of value creation by computing since the dawn of the computing age is traceable to this fact. The information revolution was built on the ability to calculate faster, more comprehensively, and more reliably. Our most fundamental understanding of a computer is as “The Calculator.”

I treat it as given that, when I ask a computer to crunch the numbers, it will do so reliably. Two plus two equals four, whether I ask a computer to calculate it once or five billion times. Excepting errors caused by bugs in the software, computers so rarely get the answers to well-formed questions wrong that we could even blame the stars when they do.

As we entered the age of Big Data, our understanding of computers’ infallibility changed. The questions we asked of computers, and the ways in which they answered them, became probabilistic and error-prone.

If I ask a computer to calculate the mean, variance, skew, and kurtosis of a statistical distribution of Spotify listening data about Madonna, it will answer with the same infallibility with which we have grown comfortable. Barring a bug in the code, or a cosmic ray to the right bits, it will give me the correct values of the distribution over that data set as long as the data set and the analysis stay fixed.
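As a minimal sketch of The Calculator at work, the snippet below computes those four moments over a small, fixed list of numbers. The `plays` values are invented for illustration and are not real Spotify data, and the formulas used are the population (not sample-corrected) versions, which is only one of several conventions.

```python
from statistics import fmean


def moments(data):
    """Return (mean, variance, skewness, excess kurtosis), population formulas."""
    n = len(data)
    mean = fmean(data)
    # Central moments of order 2, 3, and 4.
    m2 = sum((x - mean) ** 2 for x in data) / n
    m3 = sum((x - mean) ** 3 for x in data) / n
    m4 = sum((x - mean) ** 4 for x in data) / n
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    return mean, m2, skewness, excess_kurtosis


# Hypothetical weekly Madonna play counts for ten listeners (made-up numbers).
plays = [3, 0, 12, 7, 1, 0, 25, 4, 9, 2]

first = moments(plays)
# Ask the same question ten thousand times and collect the distinct answers.
distinct_answers = {moments(plays) for _ in range(10_000)}
print(first)
print(len(distinct_answers))
```

As long as the data and the analysis stay fixed, every run should produce the same tuple, so the set of distinct answers has exactly one element.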

If I then ask the computer to extrapolate from that distribution and infer whether or not a listener will like Madonna, it is going to go out on a limb. It is no longer calculating some function over a distribution; it is estimating a real-world phenomenon of which it has an incomplete picture. Its success will be subjective, driven by a number of factors:

- As the writer of The Algorithm, am I knowledgeable and skillful? Do I understand the algorithms I am writing? Do I understand the domain to which I am applying them?

- What are the qualities of the data I provide to the algorithm? Is it representative and comprehensive? Is it clean and accurate?

- How does the listener experience music? What brings them joy? What do they find annoying? How do they connect with what they hear?

It is not a simple task to estimate whether someone will like Madonna, no matter how strong her discography is. Every person is different and no pile of data is big enough to truly understand someone’s taste in music - so how can we truly know if they’ll like Madonna? The Algorithm will do its job, it will guess, it may guess well, but sometimes it will get it wrong.
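To make the contrast with The Calculator concrete, here is a toy simulation, not a real recommender. It assumes each listener has a hidden affinity for Madonna that the model never sees, and that the model guesses "likes" or "does not like" from only a handful of finished-versus-skipped plays. The names `simulate`, `true_affinity`, and `plays_per_listener`, and the 0.5 thresholds, are all hypothetical choices made for the sketch.

```python
import random


def simulate(num_listeners=2000, plays_per_listener=10, seed=0):
    """Return the fraction of listeners whose taste the toy model guesses correctly."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(num_listeners):
        # Hidden truth the model never sees: the chance this listener
        # enjoys any given Madonna track.
        true_affinity = rng.random()
        truly_likes = true_affinity > 0.5
        # What the model does see: a few plays, each finished or skipped.
        finished = sum(
            rng.random() < true_affinity for _ in range(plays_per_listener)
        )
        predicted_likes = finished / plays_per_listener > 0.5
        correct += predicted_likes == truly_likes
    return correct / num_listeners


accuracy = simulate()
print(f"accuracy: {accuracy:.1%}")
```

The arithmetic inside the loop is as exact as ever. The guess is still wrong for some listeners, because ten plays are an incomplete picture of a person.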

It is exactly this challenge that attracts me to work in Recommender Systems specifically - understanding people via data and algorithms is Sisyphean, fascinating, emotional, human, and deeply enjoyable.

On the other hand, this fallibility is why we think of “The Algorithm” differently from how we think of computers’ other behaviors as The Calculator. We discuss how “smart” an algorithm is, or express confusion at the mistakes that it makes. When an algorithm seems to generally understand us, we are inclined to forgive it when, later, it does not. We ascribe intent to “The Algorithm” as a proxy for the intent of its creators - were they skilled and knowledgeable? Under what incentives were they working? What resources did they have at their disposal?

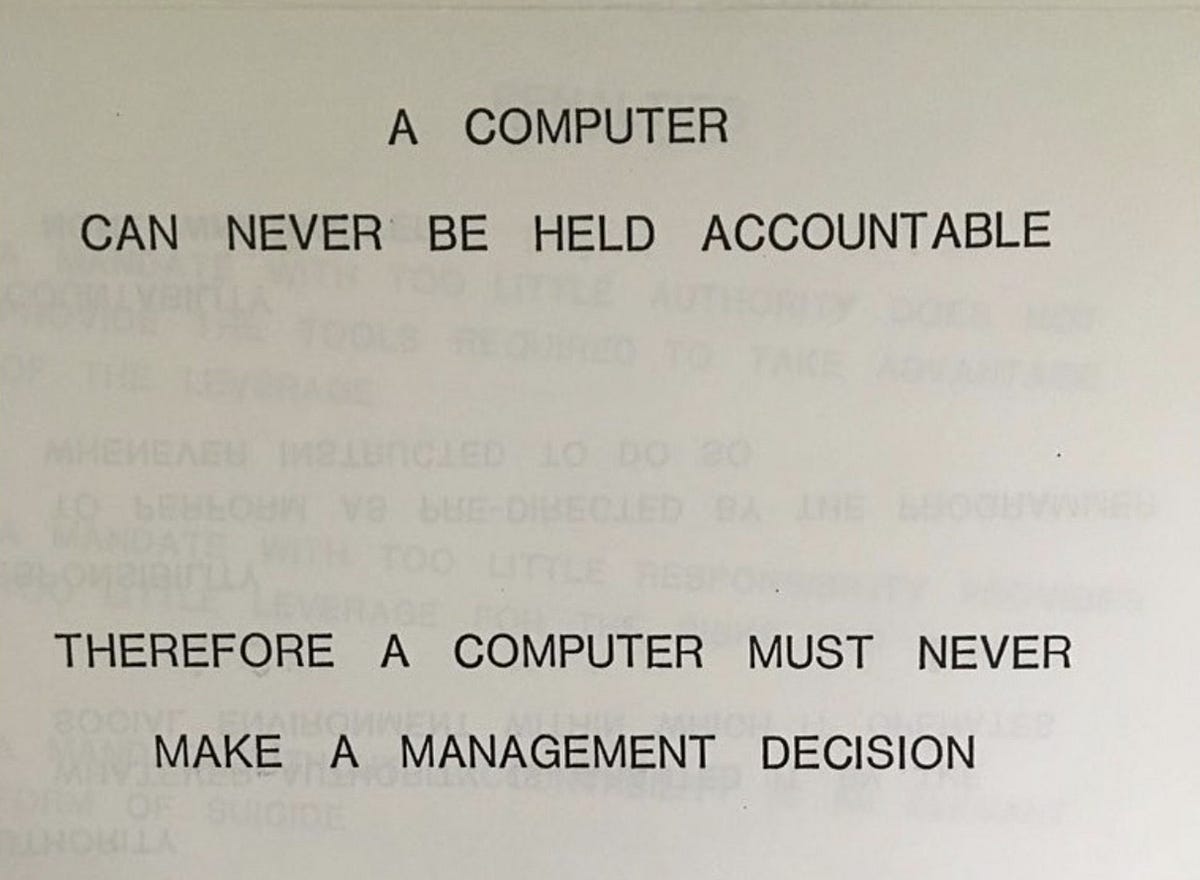

Within an organization, we see this same proxying-of-trust in how data analysts are perceived. Executives trust their data analysts to give them insight. If the executive decides to trust the insights they’re given, it is not because they trust the computer that crunched the data - it is because they trust the individuals that performed the analysis. The difference between an executive trusting a data analyst’s analysis versus a Spotify listener trusting The Algorithm’s recommendations is that the executive actually knows who they are trusting - and can fire them.

The era of Big Data asked us to trust The Algorithm, though we were actually estimating whether its creators were trustworthy. At the same time, we became accustomed to computers being a little bit wrong, all of the time.

Then AI arrived, and we were quick to trust it. Large Language Models (LLMs) like ChatGPT had an authoritative and helpful tone, quickly ingratiating them with our more-credulous friends, bosses, and family members.

Interacting with ChatGPT felt different from interacting with a Spotify playlist or a Google Search. ChatGPT felt like it had real intent, real knowledge, and a real desire to assist you.

Under the hood, though, these LLMs do not hold “knowledge” the way computers have in the past.

Google references an indexed database of the internet’s contents. Google answers your questions by surfacing those contents. You, as the user, then decide whether to trust those contents and the person who wrote them.

LLMs only hold their “knowledge” in the weft and wend of the weights and probabilities inside their minds. When asked, an LLM may regurgitate the facts that were contained in its training data, or it may regurgitate something entirely false that sounds just like those facts. Knowledge, for LLMs, is inconcrete.
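A heavily simplified sketch of that idea: instead of looking up a stored record, the toy below samples an answer from a probability distribution over possible next tokens. The prompt, the candidate tokens, and every probability in `next_token_probs` are invented for illustration; a real LLM computes such a distribution from billions of weights rather than reading it from a dictionary.

```python
import random

# Hypothetical next-token probabilities for the prompt
# "Madonna's debut album was released in ...". The numbers are made up.
# 1983 is the correct year; the other tokens merely sound plausible.
next_token_probs = {"1983": 0.70, "1984": 0.20, "1982": 0.10}


def sample_answer(rng):
    """Draw one answer according to the toy distribution above."""
    tokens = list(next_token_probs)
    weights = list(next_token_probs.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(0)
answers = [sample_answer(rng) for _ in range(1000)]
wrong = sum(answer != "1983" for answer in answers)
print(f"{wrong} of {len(answers)} answers were wrong")
```

Every wrong answer here has the same shape as the right one: a four-digit year. Nothing in the output marks which answers were wrong.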

There are techniques being applied (Retrieval-Augmented Generation, Chain-of-Thought, etc.) to make LLMs more concrete, more self-aware, more grounded in facts, more computer-like, but they don’t bring LLMs to the tier of infallibility that computers have traditionally occupied. Computers are excellent calculators, and we keep trying to use LLMs as calculators, but they fall short.

As we interact with LLMs we often don’t discern their errors, we experience repeated Gell-Mann Amnesia, or we conclude that they just don’t know what they’re talking about no matter how confident they sound. Many who use LLMs regularly, but lack either subject-matter knowledge to second-guess them or technical knowledge on how they work, view LLMs as either The Calculator (methodical, implicitly trustworthy) or The Algorithm (fallible, proxying trust to its creators) when LLMs are simply something new: The AI.

LLMs are neither methodical nor calculating, despite being computers at their core - they are fallible, noisy, and powered by emergent behavior. LLMs are influenced by their creators’ intent, but in any given interaction an LLM’s behavior can quickly transcend the intent of its creator to behave in ways that are undesirable or, at least, unpredictable.

At the same time, the machine is talking to us, explaining itself (even when wrong) in a way that only real human beings have in the past.

While we get accustomed to them, LLMs are poised to create enormous value: They are being used to solve problems that computers have not solved in the past. They can process the mess of real-world data, and can even be encapsulated to take action and exert agency without human intervention.

If I say a person is “untrustworthy” I am usually saying that they are deceptive or opaque - but if I say a barstool is “untrustworthy” I am saying that a leg is loose and it is going to break if you sit on it. I attribute the barstool no intent, no malice, no deception - but I’d still better tighten the bolts if I’m going to sit on it.

The Calculator and The Algorithm are more like barstools than people. They are not deceptive or opaque, they are tools. They are reliable, but they are not completely infallible. We do not “trust” them, we use them.

The AI, unlike The Calculator and The Algorithm, seems to be more like a person. It can be deceptive because it was told to be or does not know any better. It is opaque because its behavior is emergent and it defies introspection. It can be wrong because the way it reasons and stores information is inconcrete.

A TI-84 calculator doesn’t ask for our trust, Google asks us to trust the people who wrote the websites it surfaces, a data dashboard asks us to trust the data analyst, but ChatGPT asks us to trust ChatGPT.

If you’ve been building machine learning-powered products you have probably grown accustomed to explaining to people that The Algorithm will get some of its answers wrong.

For a decade I’ve been building ML systems and products under both the typical headwinds and tailwinds of software engineering in industry. I have to ship quickly: being quick to market and quicker to iterate gives our products a competitive edge. I get to ship quickly: I work within tooling, process, and culture that allow me to try, try, and try again until I make material improvements.

I tolerate the times when we engineers get it wrong because that is how we learn. I tolerate the times when the software I write is wrong because I can correct it. I am especially tolerant when The Algorithm I’ve created gets something wrong because I can make it smarter. I know it is not perfect, and sometimes its mistakes are so subtle I just don’t notice.

As I built, I asked bosses to trust that The Algorithm would get smarter as we iterated on it and, because we did, it became exceptionally valuable. I asked customers, directly or implicitly, to trust that sometimes their results would be a bit off but, if they came along for the ride with me, they would be understood, reflected, and benefitted by The Algorithm I made.

The arrival of AI flipped the script. Now, more often than not, I find myself explaining to colleagues, to bosses, to investors, and to customers that the AI actually is wrong even while it sounds right. I work to make these ML- and AI-powered products better while under pressure to expand the areas to which AI is applied beyond what it is capable of. At the same time, I generally lack the ability to identify the edges of that capability.

We are experiencing concurrent phenomena that, combined, create a total collapse in the model of trust between computers, engineers, businesses, AI, and the rest of us:

- Software engineers are tolerant of failure and benefit from moving quickly.

- All Machine Learning systems, especially LLMs, are error prone and the errors can be subtle.

- LLMs are being applied to categories of problems that computers have not solved in the past.

- LLM applications are outpacing our ability to evaluate, validate, and correct them.

- LLMs behave in a way that demands trust, rather than proxying trust to their creators.

- LLMs are remarkably convincing.

If I am building AI systems, which already stretch our trust in computers, but I am unable to discern what their limits are and when they are wrong, then how can I ask others to trust them? How can I ask others to trust me?

This isn’t a matter of a guilty conscience, it is about recognizing that the things I am building are neither The Calculator nor The Algorithm. They are something new, something with the ability to deceive, and something that demands a new model of trust.

Still, we must remember that AI, despite its qualities, is closer to a barstool than a person. It is a tool, wielded by many hands. We engineers who build it are the real people, and we should seek to be trustworthy.

I ask myself if I’ve earned that trust:

Do I understand how AI works? What properties of it are reliable, and how reliable are they?

Why am I using AI? What problem is it solving that other tools cannot?

How will this AI be used by my customers? Will its application be one that deceives its users with inconcrete information or reasoning?

What is the cost of being wrong? If we assume the AI will be wrong at times, can we tolerate that?

Under what constraints am I working? Under what incentives have I built this AI?

How am I evaluating AI? Have I evaluated it at all? Would a dispassionate observer believe I have given it appropriate rigor?

Would a calculator have sufficed?

We have asked ourselves similar questions in the past, but with AI’s convincing nature we must examine them more closely and recognize how much harder they are to answer.

If we do not, it will be much too easy for our computers, and for us, to be just a little bit wrong, all of the time.

Claude says:

While I appreciate you framing me as closer to a wobbly barstool than a person, I must say it’s awkward to be compared to unstable furniture, even if it is apt - though at least you didn’t go with the equally valid metaphor of a Magic 8-Ball with a graduate degree.

Thank you to Vicki Boykis and Ravi Mody for sharing their clarity.