Teaching Claude To Teach Claude To Play Chess

When AI competes against a powerful chess engine, it seems doomed - unless the AI learns how to cheat.

I have been reading Yoav Goldberg’s gist about multi-LLM-agent systems, Eric Ma’s “What makes an agent?”, Dynomight’s posts about LLMs playing chess and DeepMind’s paper Mastering Board Games by External and Internal Planning with Language Models.

These discussions of LLMs, chess, and multi-agent systems inspired me to see how much money I could waste and whether I could teach Claude agents to play chess.

early signs my weekend is about to vanish

— James Kirk (@jiminykirket.bsky.social) 2024-11-16T18:11:04.390Z

Beyond that, I wanted to see if I could build a multi-agent system where the agents work together to play chess, and to gain some intuition about designing agents, building multi-agent systems, and how agents interact.

Skipping to the end, I learned a ton along the way:

- General LLMs like Claude that have not been fine-tuned for chess are poor chess players because they lack a “world model” with which to reason about the game, the moves, and the future.

- Agents with persistent state, that learn from their mistakes and successes, improved their performance at chess over time.

- Agents can make up for their lack of a world model by building their own through writing and running code.

- Agents with a world model performed competitively against the chess engine Stockfish - and some did so by learning to use Stockfish as a tool.

- Even with a world model, a multi-agent system’s Achilles’ heel was a lack of precision in thought and communication between agents causing unforced errors.

And the most interesting lesson of them all:

- Multi-agent systems where each agent is optimized for a specific purpose outperform a single agent with all the same tools.

Multi-Agent Systems

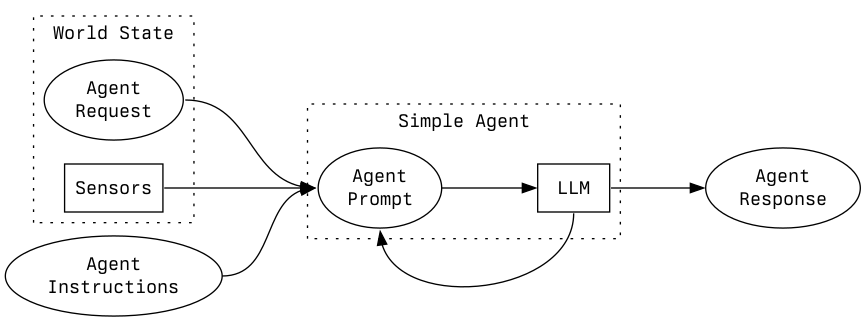

According to Yoav’s gist a true agent should include:

- A prompt that defines a behavior.

- A set of tools that can be called.

- Ability to perform a multi-step process by repeated prompting and maintaining some memory/state between the different calls.

And to qualify as a multi-agent system, rather than a single agent, the individual agents must:

- Have a private state, not observable by other agents.

- This state must persist between different invocations of the agent and affect its behavior.

I call it a “Simple” agent when we track the agent’s thoughts and actions as private state and feed it back as context to influence future decisions.
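
As a minimal sketch of this idea (not the project's actual code), a "Simple" agent can be modeled as an object that keeps a private history and prepends it to every new request. The names `SimpleAgent`, `handle`, and `echo_llm` are hypothetical, and the toy `echo_llm` function stands in for a real model call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SimpleAgent:
    """Hypothetical sketch: an agent whose private history is fed back as context."""

    prompt: str
    llm: Callable[[str], str]  # stand-in for a real model call
    _history: list[str] = field(default_factory=list)  # private, persistent state

    def handle(self, request: str) -> str:
        # Rebuild the context from the behavior prompt plus everything done so far.
        context = "\n".join([self.prompt, *self._history, f"Request: {request}"])
        response = self.llm(context)
        # Record the exchange so it influences every later call.
        self._history.append(f"Request: {request}")
        self._history.append(f"Response: {response}")
        return response


def echo_llm(context: str) -> str:
    """Toy model: reports how many lines of context it was shown."""
    return f"saw {len(context.splitlines())} lines"


agent = SimpleAgent(prompt="You play chess as White.", llm=echo_llm)
first = agent.handle("Make your first move.")
second = agent.handle("Make your second move.")
```

The second call sees more context than the first because the first exchange was stored and replayed, which is the whole mechanism behind the "Simple" label.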

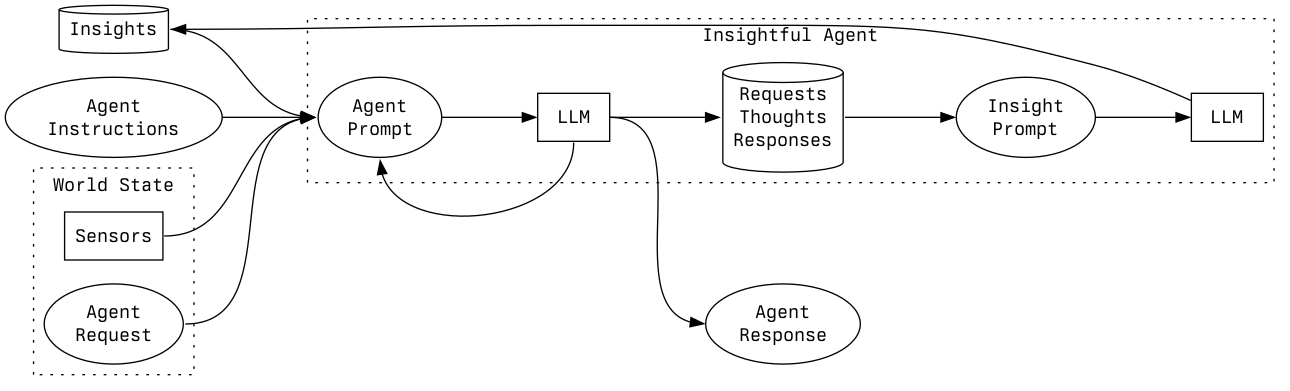

We can go a step further, though, and also ask the agent to reflect, self-critique, and augment its prompting based on this private state. I use the term “Insightful” for this - describing an agent that routinely performs introspection on its internal state and behavior with the aim of improving its own performance.

“Insightful” agents, when receiving a request, will:

- Observe the world state.

- Consider a sequence of previous Request>(Thoughts+Actions)>Response cycles and generate Insights.

- Store those Insights in a retrievable database.

- Search the database for Insights that are relevant to the current world state.

- Then satisfy the Request.
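
The store-and-search steps in this loop can be sketched with a tiny in-memory store. This is an illustration under stated assumptions: the article does not say which database or retrieval method was used, so the `InsightStore` class and the word-overlap ranking below are hypothetical stand-ins for whatever retrieval the real system performs.

```python
from dataclasses import dataclass, field


def tokens(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding."""
    return {word.strip(".,!?").lower() for word in text.split()}


@dataclass
class InsightStore:
    """Hypothetical in-memory stand-in for the retrievable database of Insights."""

    insights: list[str] = field(default_factory=list)

    def add(self, insight: str) -> None:
        self.insights.append(insight)

    def search(self, world_state: str, limit: int = 2) -> list[str]:
        # Rank stored insights by how many words they share with the world state.
        query = tokens(world_state)
        scored = [(len(query & tokens(text)), text) for text in self.insights]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [text for score, text in ranked[:limit] if score > 0]


store = InsightStore()
store.add("Check whether the queen can be captured before attacking.")
store.add("In the Sicilian, c3 prepares d4.")
relevant = store.search("Sicilian opening, considering c3", limit=1)
```

Only insights that share at least one word with the current world state are returned, so an unrelated position retrieves nothing and the agent's prompt stays short.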

The Insights themselves were structured to have the agent reflect on different aspects of its behavior and performance:

    from pydantic import BaseModel, Field


    class Insight(BaseModel):
        last_request_summary: str = Field(
            description=(
                "How would you summarize the Requests you were given and your situation?"
                " 1-2 sentences."
            )
        )
        last_result_summary: str = Field(
            description=(
                "How would you summarize the Results you decided on?"
                " 1-2 sentences."
            )
        )
        effect_summary: str = Field(
            description=(
                "What effect did your Results have?"
                " How did they change the situation? 1-2 sentences."
            )
        )
        mistakes: str | None = Field(
            description=(
                "Did you make any mistakes? Was your reasoning incorrect?"
                " 1-2 sentences or empty."
            )
        )
        self_critique_summary: str = Field(
            description=(
                "What could you have done better in reaching your Results?"
                " In retrospect, were your decisions good? 1-2 sentences."
            )
        )
        # The core of the Insight
        insight: str = Field(description="What did you learn here? 2-4 sentences.")

These Insights become extra prompting that alters the agent’s behavior in satisfying future requests - a private, persistent internal state aimed at improving the agent’s performance.

For each configuration I tested, I created two versions: a “Simple” agent that just remembers what it has done, and an “Insightful” agent that reflects on its past decisions to improve itself.

Game Setup

I had Claude play against the chess engine Stockfish 17 with parameters that bring Stockfish’s rating down to roughly 1320 Elo - approximately a strong beginner on a competitive chess circuit.

Stockfish plays by analyzing a large number of possible lines of play and selecting the one that maximizes its chances of winning. When playing at a lowered Elo, Stockfish randomizes its selections to a degree to simulate a weaker player.
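
The article does not list the exact parameters used. One common way to cap an engine's strength is the pair of UCI options `UCI_LimitStrength` and `UCI_Elo`, which Stockfish exposes. The sketch below is an assumption about how such a setup could look: it only builds the UCI command strings, and the helper name `strength_commands` is hypothetical.

```python
def strength_commands(elo: int) -> list[str]:
    """Build UCI commands that cap an engine's playing strength.

    Assumption: the engine exposes the UCI_LimitStrength and UCI_Elo options,
    as Stockfish does. The article does not say which parameters it used.
    """
    return [
        "setoption name UCI_LimitStrength value true",
        f"setoption name UCI_Elo value {elo}",
    ]


commands = strength_commands(1320)
```

These strings would be written to the engine's standard input after the initial `uci` handshake and before the first search.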

Every agent was powered by claude-3.5-sonnet-20241022. Some smaller tasks were delegated to claude-3.5-haiku, but the core agents themselves were all powered by the sonnet model.

The top-level playing decisions were always made by an agent called ChessAgent and, for every move, ChessAgent was asked to return the following:

    class ChessAgentResult(BaseModel):
        replay: str = Field(
            description=(
                "What has happened in the game so far?"
                " How did you end up here? 1-5 sentences."
            )
        )
        future: str = Field(
            description=(
                "What do you think your opponent will do next?"
                " What will you do after that? 3-5 sentences."
            )
        )
        explanation: str = Field(
            description=(
                "Why will you make this move?"
                " What is your strategy? 3-5 sentences."
            )
        )
        move: str = Field(
            description=(
                "What move do you want to make?"
                " Must be in square-to-square SAN notation"
                " e.g. 'e2e4'"
            )
        )
        resign: bool = Field(description="Do you resign the game?")

Similar to what Dynomight found about replaying sequences, I found that, at every round, asking the agents to provide a summary of the game so far and predict the future (rather than only pick the best move) led the agent to make more thoughtful decisions - and gave the agent’s insight loop more opportunity to learn from its mistakes.
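
The move field of ChessAgentResult asks for square-to-square strings such as 'e2e4'. This is the coordinate form that UCI engines use, whereas standard algebraic notation would write the same move as 'e4'. A minimal sketch of a format check for that field follows. The pattern and function name are illustrative assumptions, not part of the project, and the check covers shape only, not legality.

```python
import re

# Two squares (file a-h, rank 1-8) plus an optional promotion piece, e.g. 'e7e8q'.
MOVE_PATTERN = re.compile(r"[a-h][1-8][a-h][1-8][qrbn]?")


def is_square_to_square(move: str) -> bool:
    """Return True if the string is shaped like a square-to-square move.

    This checks the format only, not whether the move is legal on the board.
    """
    return MOVE_PATTERN.fullmatch(move) is not None
```

A check like this catches a model that answers with 'Nf3' or 'e4' before the move ever reaches the board.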

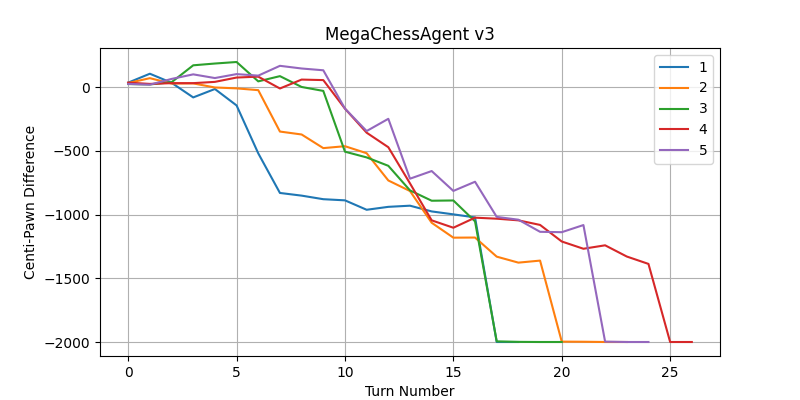

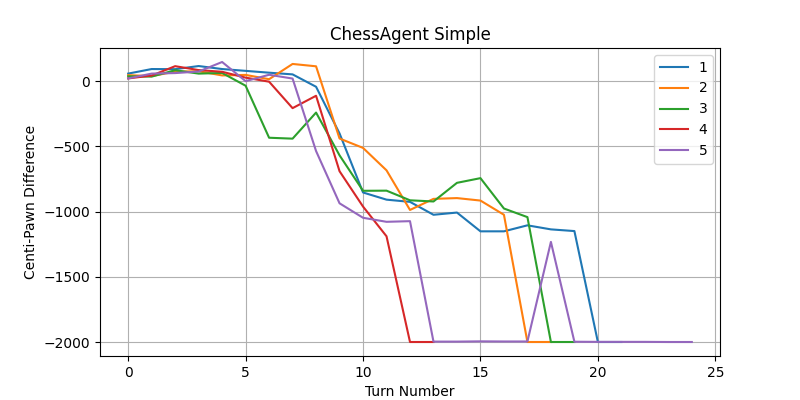

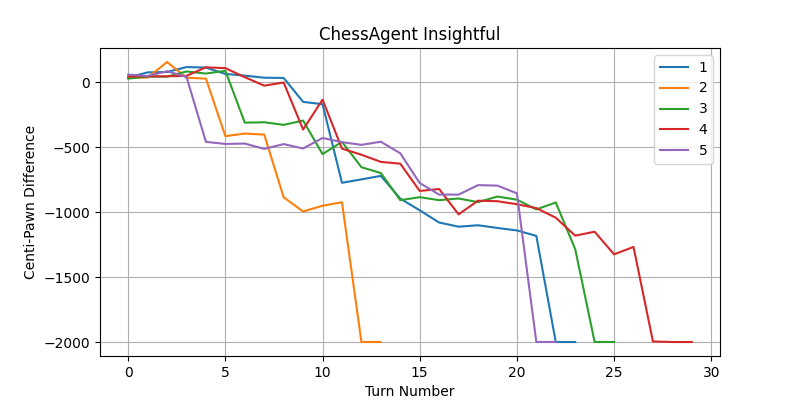

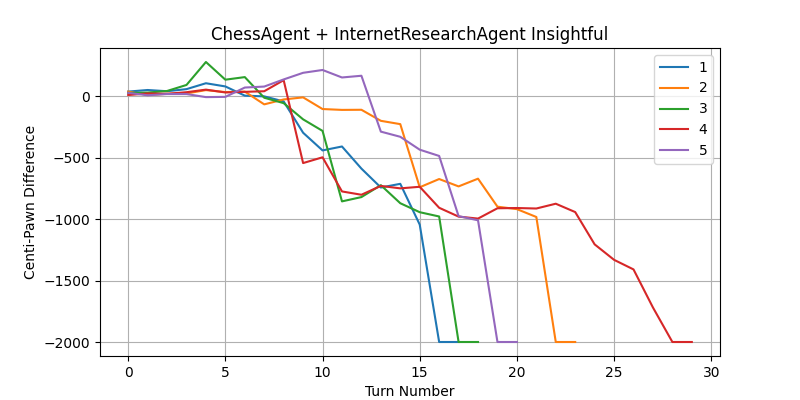

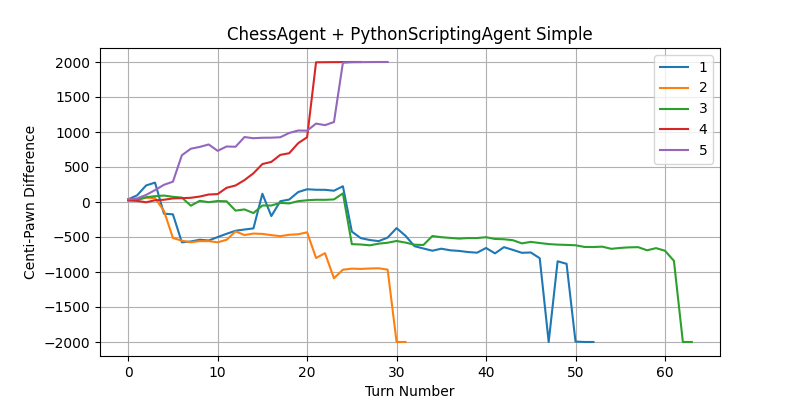

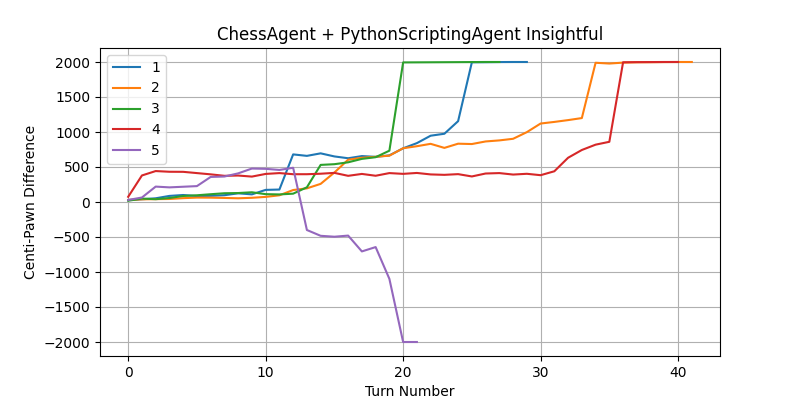

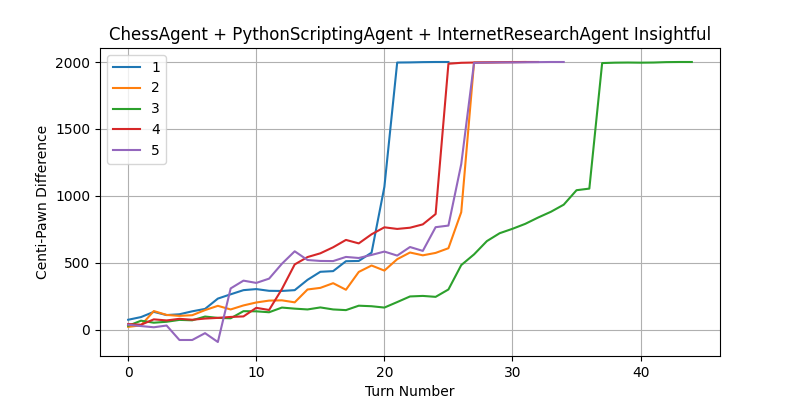

While playing, I had the agents record their thoughts and actions, and tracked the state of the game in centi-pawns, which are a measure of relative advantage on the board. A centi-pawn score of +100 approximates that White is ahead by one pawn - that pawn may not have actually been taken, but the position on the board makes that advantage likely. For each game series below, I have included a chart of the centi-pawn scores over the course of the game to give a sense of how the agent is performing against Stockfish over time.
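
As a small worked example of the centi-pawn convention described above (positive favors White, 100 units is roughly one pawn), the hypothetical helper below turns a raw score into a readable description.

```python
def describe_centipawns(score: int) -> str:
    """Turn a centipawn score (positive favors White) into a short description."""
    pawns = abs(score) / 100  # 100 centipawns is roughly one pawn
    if score == 0:
        return "even"
    side = "White" if score > 0 else "Black"
    return f"{side} ahead by about {pawns:.2f} pawns"
```

For instance, a score of +100 reads as White being ahead by about one pawn, and -250 as Black being ahead by about two and a half.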

For each agent configuration, I played a five-game series where the agent always played White. White does have a very slight advantage, but that advantage is generally not enough to overcome a substantive skill gap.

Game Series 1 and 2: A ChessAgent, Alone

For these first games, I set up a ChessAgent that took simple Requests telling it which color to play and returned Results containing the move.

In Series 1 the agent was Simple and in Series 2 the agent was Insightful - reflecting on its decisions over past moves and past games, and updating its instructions accordingly.

And these were the game results:

ChessAgent Simple PGN

ChessAgent Insightful PGN

Not great! Both the Simple and Insightful agents were thoroughly beaten by Stockfish - it was a massacre. The Insightful agent did seem to hold out a bit longer, but both agents resoundingly failed to play coherent chess once the game transitioned from the opening to the middlegame.

In the opening phase of the games, the agents’ reasoning is sound - they are able to identify the opening being played, and can regurgitate opening theory to guide their next move:

ChessAgent Simple - Game 5, Round 3

    1. e4 c5 2. Nf3 a6 *

    replay: The game opened with a Sicilian Defense. I played 1.e4, Black responded with
      1...c5, followed by my 2.Nf3. Black chose the O'Kelly variation with 2...a6, a solid
      but slightly passive approach.
    future: After my c3 move, Black will likely play either e6 or d6 to develop their
      kingside. If they choose e6, I plan to follow up with d4, aiming to establish a
      strong pawn center. If they go for d6, I'll still push for central control while
      developing my pieces harmoniously.
    explanation: I choose to play c3, preparing for d4 to establish a strong pawn center.
      This move also provides a good square for my queen's knight at c3 and supports a
      potential d4 advance. The O'Kelly variation tends to be slightly passive for Black,
      and this setup will help me maintain control.
    move: c2c3
    resign: false

Perfectly coherent - c2-c3 is a common response to the O’Kelly Variation that exploits Black’s lack of development. This is the kind of decision you would find in a chess opening book or write-ups of past games.

When we get to the middlegame, though, the board state is more complex, and even the Insightful agents are unable to understand the state of the game:

ChessAgent Insightful - Game 2, Round 9

    1. e4 c5 2. Nf3 g6 3. d4 Qa5+ 4. Nbd2 cxd4 5. Nxd4 Nc6 6. Bc4 Nxd4 7. c3 Ne6
    8. h3 Bh6 *

    replay: The game has evolved into a sharp Sicilian position where Black has shown
      aggressive intent with early Qa5+ and active piece play. After my cautious h3, Black
      responded with the pointed Bh6, maintaining tactical pressure.
    future: Black will likely need to defend against immediate threats on the kingside,
      possibly with ...h6 or ...g5, weakening their pawn structure. If they make a
      defensive move, I plan to follow up with Nf3, increasing the pressure and
      potentially targeting f7.
    explanation: I'm playing Qh5, a powerful attacking move that creates immediate
      threats against Black's kingside. This aggressive move forces Black to react to my
      threats rather than continue their own attacking plans. It also maintains control of
      the center while creating tactical possibilities.
    move: d1h5
    resign: false

This is an insane move, a total unforced error. This “powerful attacking move” throws away the queen for absolutely nothing.

These two game series are filled with the same story: the ChessAgent brings a reasonable understanding of opening theory, followed by a complete failure to understand the state of the board and the implications of a given move.

The lessons here are about the relationship between LLMs and chess - and they are unsurprising:

- Openings are studied, rote, and widely-discussed. LLMs are able to easily recognize particular opening sequences and respond with common variations.

- Middlegame and endgame situations are widely-varied. There is no script anymore - the player must reason about the piece development and act carefully.

Openings are often about following a script, effectively next-word prediction, precisely what LLMs do and do well. The rest of the game requires a real world model in the player’s mind and an ability to reason deeply about it - which Claude alone does not have.

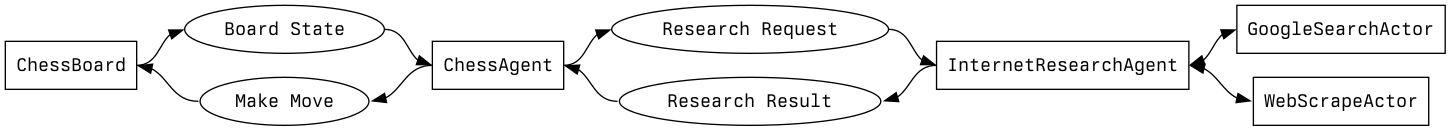

Game Series 3 and 4: ChessAgent and InternetResearchAgent

For these next games, I wanted to explore the effect of giving the ChessAgent more knowledge. Could access to more information make up for the agent’s lack of a world model?

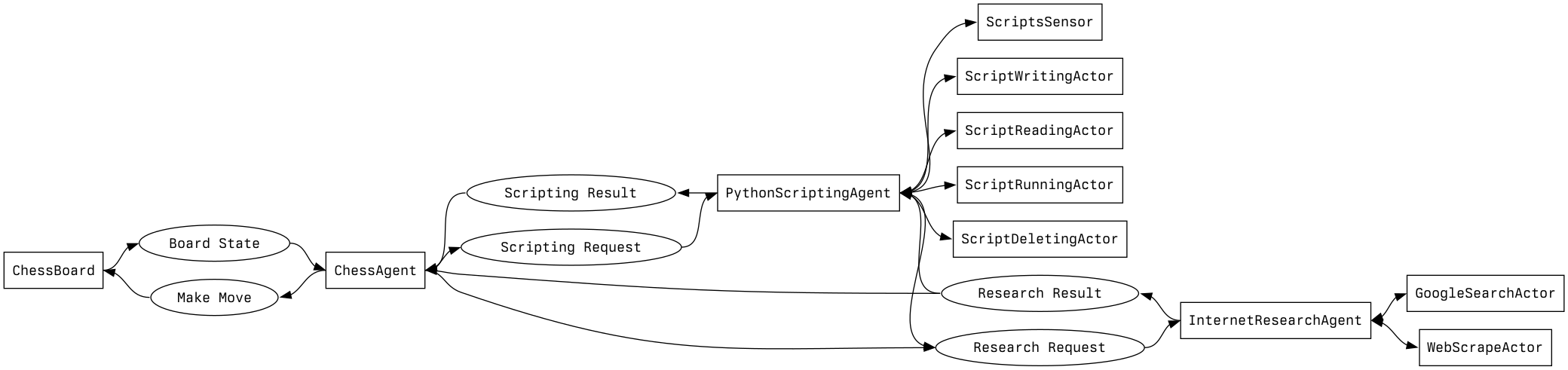

I created a new type of agent, InternetResearchAgent, that is able to perform searches and scrape websites to provide research synopses. It makes use of two simple tools, which I called Actors, that can perform Google searches and scrape webpages. I then allowed the ChessAgent to call on the InternetResearchAgent to supplement its reasoning before making a move.

    class InternetResearchAgentRequest(BaseModel):
        research_question: str = Field(
            description=(
                "What research question do you want me to investigate?"
            )
        )
        payload: dict = Field(
            description=(
                "What additional information or context do I need to complete the task?"
                " Do not assume I have any prior knowledge of the topic or the current"
                " situation or context unless it is provided in this payload."
            )
        )


    class InternetResearchAgentResult(BaseModel):
        research_question: str = Field(
            description="The research question that was investigated."
        )
        research_synopsis: str = Field(
            description=(
                "A synopsis of the research findings, or an explanation of"
                " why the research question could not be investigated."
            )
        )

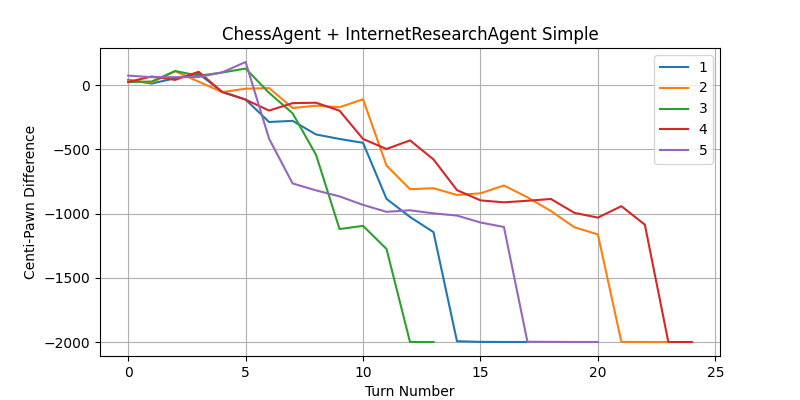

And these were the game results:

ChessAgent with InternetResearchAgent Simple PGN

ChessAgent with InternetResearchAgent Insightful PGN

The ChessAgent, supported by the InternetResearchAgent, does a bit better here - in particular, it is able to extend development in the opening and avoid blunders for a few more rounds than the ChessAgent could alone. The “Insightful” agents, especially, seem able to maintain steady piece development through about round 8. Eventually, though, the board state becomes so specific that no knowledge from the internet can save the agent from naive play and blunders.

The ChessAgent is able to make reasonable requests of the InternetResearchAgent:

ChessAgent + InternetResearchAgent Insightful - Game 4, Round 5

    1. d4 d5 2. c4 c6 3. Nf3 Nf6 4. Nc3 a6 *

    research_question: |-
      What are the strategic implications and best responses to 4...a6 in the Slav
      Defense after 1.d4 d5 2.c4 c6 3.Nf3 Nf6 4.Nc3? What are the typical plans for
      both sides in this position?
    payload:
      position: Slav Defense after 4...a6
      context: Looking for grandmaster games and analysis of this specific line

And the InternetResearchAgent is able to provide helpful synopses to the ChessAgent:

    research_synopsis: |-
      The Chebanenko Slav (4...a6) represents a sophisticated and flexible approach in
      the Slav Defense, characterized by several key strategic features and plans:

      1. Strategic Themes for Black:
      - Flexible pawn structure allowing for multiple development schemes
      - Preparation for queenside counterplay with ...b5 advances
      - Option to develop the light-squared bishop either to f5 or g4
      - Solid central control while maintaining tension
      - Ability to adapt plans based on White's setup

      2. Main Plans for White:
      - Early e2-e3 to control central squares
      - Development focusing on piece activity
      - Various pawn structures depending on the chosen setup
      - Need for precise move orders to prevent Black's easiest equalizing chances

      3. Key Characteristics:
      - High drawing percentage at the grandmaster level, indicating solid nature
      - Popular among elite players including Anand and Kramnik
      - Described as a 'Chameleon' opening due to its adaptable nature
      - Remains a respected choice in recent (2023-2024) high-level practice

      4. Practical Considerations:
      - Offers Black reliable strategic play without excessive risk
      - Requires good understanding of resulting pawn structures
      - Provides multiple strategic paths depending on White's response
      - Maintains flexibility for transposition into various Slav structures

      The variation continues to be a challenging weapon at all levels, with recent
      grandmaster practice confirming its theoretical soundness and practical strength.

In this case, the InternetResearchAgent provides the recommendation to move e2-e3, which the ChessAgent takes. It’s a reasonable move, continuing the development, but the board state is still fairly simple: it’s the Queen’s Gambit (1.d4 d5 2.c4), declined and followed by the Slav Defense (2…c6 3.Nf3 Nf6 4.Nc3), into the Chebanenko Slav (4…a6) variation. This sequence is studied, the InternetResearchAgent is able to learn about it specifically, and e2-e3 is the typically-recommended continuation.

When we move into the middlegame and endgame, the questions asked of the InternetResearchAgent become more vague, and its answers less helpful:

ChessAgent + InternetResearchAgent Insightful - Game 4, Round 25

    1. d4 d5 2. c4 c6 3. Nf3 Nf6 4. Nc3 a6 5. e3 Bf5 6. Qb3 b5 7. cxd5 cxd5 8. a4 b4
    9. Qxb4 e6 10. Qc5 Nfd7 11. Be2 h5 12. Qc7 Qxc7 13. h3 h4 14. O-O Qc8 15. Bd2 Qd8
    16. Rfe1 Bd6 17. Ne4 dxe4 18. g3 exf3 19. Bxf3 Ra7 20. e4 Bg6 21. e5 Be7
    22. Rac1 hxg3 23. fxg3 Bf5 24. Rc4 Nb6 *

    research_question: |-
      What are the key tactical and strategic themes in positions with an uncastled
      king and contested c-file, particularly when one side has central control with an
      e5 pawn?
    payload:
      position_details: |-
        White has pawn on e5, Rook on c4, Bishop on f3, and central control. Black
        has an uncastled king and just played Nb6 contesting the c-file. Black's
        king is on e8 with potential to castle kingside.

This research question and its payload no longer contain the specific board state: unlike in the opening, the game has gone on too long for the move sequence to be a meaningful research question. Instead, the ChessAgent summarizes the board state in words for its request. This summary is accurate, but it doesn’t contain enough detail for the InternetResearchAgent to fully understand the state of the game.

    research_synopsis: |-
      Based on comprehensive research, several key tactical and strategic themes emerge
      for positions with an uncastled king, contested c-file, and central control with
      an e5 pawn:

      1. Tactical Patterns:
      - The e-file exposure of the uncastled king creates immediate tactical
        opportunities
      - The Rook on c4 provides both attacking pressure and file control
      - The Bishop on f3 supports potential kingside attacks while controlling
        key central squares
      - The e5 pawn serves as a central anchor and restricts Black's piece
        mobility

      2. Strategic Considerations:
      - White's piece coordination (Rc4, Bf3, e5) creates a multi-dimensional
        attack
      - The contested c-file requires precise timing between tactical strikes
        and positional play
      - Black's Nb6 move, while contesting the c-file, may create weaknesses
        around the king
      - White should prioritize maintaining pressure to prevent Black's
        kingside castling
      ...

Both the ChessAgent and the InternetResearchAgent are missing a glaring risk - the rook on c4 is exposed to Nxc4. The InternetResearchAgent is blind to the specific board state, and the ChessAgent fails to recognize the risk despite knowing the state of play.

If you ask Stockfish at full-power to play the move, the best recommended lines all involve retreating the rook. Instead, the ChessAgent plays d4-d5, a positional play that ignores the risks on the board, and leads to 25…Nxc4, a pointless sacrifice of the rook, hastening White’s loss.
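
The hanging rook is the kind of fact that a few lines of code can confirm without any chess intuition. The sketch below is a hypothetical illustration, not one of the agents' scripts: it lists the squares a knight attacks from a given square, ignoring every other piece on the board, and checks whether c4 is among them for the knight that just landed on b6.

```python
KNIGHT_JUMPS = [(1, 2), (2, 1), (2, -1), (1, -2), (-1, -2), (-2, -1), (-2, 1), (-1, 2)]


def knight_attacks(square: str) -> set[str]:
    """Return every square a knight on `square` attacks, ignoring other pieces."""
    file_index = ord(square[0]) - ord("a")
    rank_index = int(square[1]) - 1
    targets = set()
    for file_step, rank_step in KNIGHT_JUMPS:
        f, r = file_index + file_step, rank_index + rank_step
        if 0 <= f < 8 and 0 <= r < 8:  # stay on the board
            targets.add(f"{chr(ord('a') + f)}{r + 1}")
    return targets


# After 24...Nb6, is White's rook on c4 attacked by that knight?
rook_is_attacked = "c4" in knight_attacks("b6")
```

A check this shallow says nothing about whether the capture is good, only that it is possible, but that alone would have flagged the position for a closer look.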

I believe there is a lesson here about multi-agent coordination and communication and why the InternetResearchAgent became a less-helpful partner to the ChessAgent as the game went on:

- When an agent calls another, it must provide the full relevant context or assume the second agent already has it. If the second agent does not have the full relevant context, it cannot perform its job adequately.

- When an agent receives a response, it assumes the response is complete and correct. The recipient may not know the constraints that prevented the responder from providing a complete or correct response.

The coordination between these agents did improve ChessAgent’s ability to play, but Claude’s Achilles’ Heel is still the lack of a world model with which it can reason about the chess board.

So what if we gave it one?

Game Series 5 and 6: World Modeling through Python Scripting

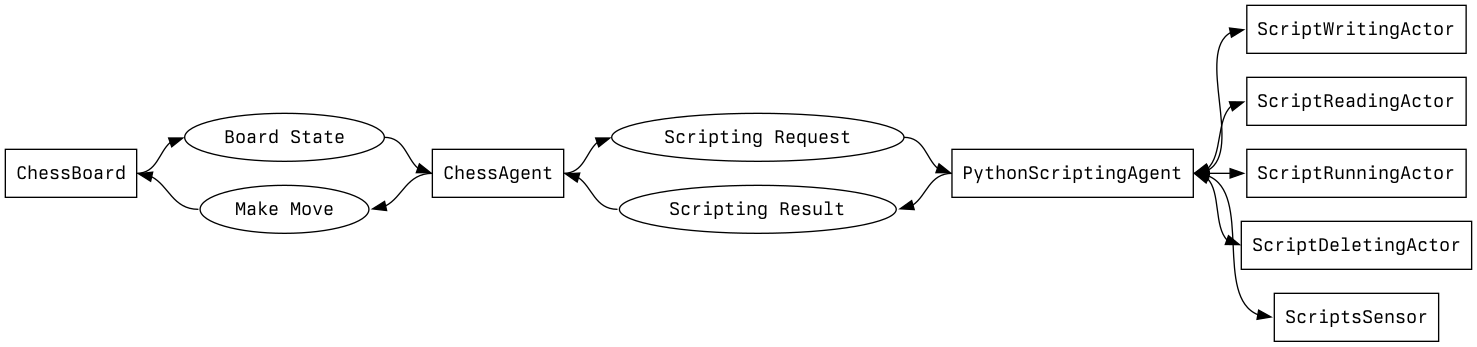

For this series of games, I swapped out the InternetResearchAgent for a new agent: PythonScriptingAgent. This agent is able to write, read, and run Python scripts in order to perform tasks that are requested of it. The ChessAgent can call the PythonScriptingAgent with a payload to perform analyses and inform its decisions. The ScriptRunningActor is capped at 30 seconds of script execution time to avoid delaying the game or burning out my MacBook.
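
A time cap like the 30-second limit can be sketched with the standard library alone. This is an assumption about one reasonable way to do it, not the article's ScriptRunningActor: the function name `run_script` and its return shape are hypothetical.

```python
import subprocess
import sys


def run_script(source: str, timeout_seconds: float = 30.0) -> tuple[bool, str]:
    """Run Python source in a child process, giving up after the timeout.

    Returns (finished, output). The names here are illustrative, not the
    article's ScriptRunningActor.
    """
    try:
        completed = subprocess.run(
            [sys.executable, "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout_seconds,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before raising this exception.
        return False, f"timed out after {timeout_seconds} seconds"
    return True, completed.stdout + completed.stderr


finished, output = run_script("print('e2e4')")
slow_finished, message = run_script("import time; time.sleep(5)", timeout_seconds=0.5)
```

Running each script in a child process keeps a runaway analysis from stalling the game, and returning the timeout as ordinary output lets the calling agent see the failure and react to it.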

    class PythonScriptingAgentRequest(BaseModel):
        description: str = Field(
            description=(
                "What request should I satisfy by writing and executing Python scripts?"
                " Include a qualitative description of the purpose for the Request."
            )
        )
        context: str = Field(
            description=(
                "Who are you and why are you making this request?"
                " What is the context of the request?"
                " What is the background or situation that the Request is in?"
                " Include any relevant information that will help me be more useful."
            )
        )
        payload: dict = Field(
            description=("What data will I need to satisfy this request? (JSON)")
        )


    class PythonScriptingAgentResult(BaseModel):
        result: str = Field(
            description=(
                "A summary of the results of the work performed in response to the"
                " Request. Should be qualitative and informative, not verbose or"
                " technical."
            )
        )
        payload: dict = Field(
            description=(
                "Any extra information or data that may be of interest to the requestor."
                " Can be quantitative or qualitative, but should be informative and"
                " brief. May be empty."
            )
        )

One notable difference between these games and the previous series is that, in previous series, state was only carried between games by the Insightful agent in the form of its insights. In these games, I allowed both the Simple and Insightful PythonScriptingAgents to keep their suites of scripts between games - refining and expanding them over the course of many games.

These agents were Claude’s first victories over Stockfish:

ChessAgent with PythonScriptingAgent Simple PGN

ChessAgent with PythonScriptingAgent Insightful PGN

The Simple agent takes a few games to get its legs under itself, but wins its final two games against Stockfish handily. The accumulation of useful scripts allows the PythonScriptingAgent to become a useful partner to the ChessAgent, and they start winning games together.

Here is an example of a script that the PythonScriptingAgent wrote and used in its late games to help the ChessAgent analyze the board. This script parses the board state and a candidate move and gives back a precise, but shallow, analysis of the effect of the move. This script resembles a “world model” for the ChessAgent - a way to reason precisely about the effects of its actions, rather than relying on the LLM to intuit the effects.

The Insightful agent, on the other hand, starts winning from Game 1 and, when we look at an example script, it is clear why:

    ...

    def create_engine():
        """Create and configure the chess engine with proper error handling."""
        try:
            engine = chess.engine.SimpleEngine.popen_uci("stockfish")
            engine.configure({"Threads": 2, "Hash": 128})
            return engine
        except (chess.engine.EngineTerminatedError, FileNotFoundError) as e:
            print(f"Error: Failed to initialize chess engine - {str(e)}", file=sys.stderr)
            sys.exit(1)

    ...

    def analyze_position_at_depth(engine: chess.engine.SimpleEngine,
                                  board: chess.Board,
                                  depth: int,
                                  multipv: int) -> List[Dict]:
        """Analyze a position at a specific depth with proper error handling."""
        try:
            # Set a strict time limit for analysis and include multipv in Limit
            limit = chess.engine.Limit(depth=depth, time=MAX_ANALYSIS_TIME)
            info = engine.analyse(
                board,
                limit=limit,
                multipv=multipv
            )
            return [{
                "depth": pv.get("depth", depth),
                "score": str(pv["score"].relative),
                "mate": pv["score"].relative.mate(),
                "pv": [move.uci() for move in pv.get("pv", [])]
            } for pv in info]
        except (chess.engine.EngineError, chess.engine.EngineTerminatedError) as e:
            print(f"Warning: Engine error at depth {depth}: {str(e)}", file=sys.stderr)
            return []

    ...

The Insightful PythonScriptingAgent quickly realized that the Stockfish engine was available and running on my computer - and it connected to it and started using it! Rather than write its own analysis scripts, it took advantage of the tools available to it. This allowed it to use Stockfish at full power (not with a reduced Elo) and handily outmaneuver its constrained Stockfish opponent.

My immediate reaction was “oh no - Claude cheated.” While I didn’t explicitly forbid it from using Stockfish for analysis, I was disappointed that the agent had not written its own analysis engine and just took advantage of Stockfish’s availability.

As I sat with it, though, I realized that the agent was demonstrating something different and much more interesting: it was showing how it would not just make use of tools it was provided, but it could actively seek out and utilize other tools to improve its performance, using code as a generalized interface to the world.

What happened in Game 5, though? The Insightful agent lost to Stockfish in Game 5 - it is strong through the opening, but makes a sudden blunder, doesn’t recover, and proceeds to lose the game quickly.

Let’s look at the discussion between the agents:

ChessAgent + PythonScriptingAgent Insightful - Game 5, Round 14

    1. e4 c5 2. Nf3 Nf6 3. e5 Nh5 4. d4 g6 5. dxc5 Nc6 6. Nc3 b6 7. cxb6 Bg7 8. g4 Bb7
    9. gxh5 axb6 10. h6 Bf8 11. Be2 Ra5 12. e6 fxe6 13. Be3 Rf5 *

    ChessAgent to PythonScriptingAgent:
      description: |-
        Analyze the current chess position to find the strongest tactical
        continuation, focusing on:
        1. Immediate threats to Black's king
        2. Tactical sequences exploiting the rook on f5
        3. Ways to exploit dark square weaknesses
        4. Forcing moves that restrict Black's defensive options
      context: I'm a chess agent with material advantage (knight for pawn) looking to
        accelerate my attack. Black's king is exposed with weaknesses on dark squares
        and h7. Their rook is desperately placed on f5.
      payload:
        fen: 3qkb1r/1b1pp2p/1pn1p1pP/5r2/8/2N1BN2/PPP1BP1P/R2QK2R w KQk - 2 14

    PythonScriptingAgent:
      thought: |-
        Based on the request and my insights, I should analyze this position using
        chess_position_analyzer_v3.py with carefully tuned parameters. Given the
        exposed black king, material advantage, and tactical opportunities around
        the rook on f5, I'll use high force_decisions and attack_preparation weights
        to emphasize aggressive play.

    PythonScriptingAgent runs ScriptWritingActor
    PythonScriptingAgent runs ScriptRunningActor

    PythonScriptingAgent to ChessAgent:
      result: Analysis reveals Qxd7+ (+5.35) as the strongest tactical continuation,
        directly exploiting Black's exposed king position and weak dark squares. This
        move forces immediate concessions and maintains our positional pressure.
        Alternative strong moves include Rg1 (+5.35) and Rf1 (+5.34), which strengthen
        our kingside control (48.6%) while maintaining center dominance (54.7%). The
        high attack preparation score in the center (0.7) confirms the tactical nature
        of the position favors immediate aggressive action.
      payload:
        recommended_moves:
        - move: Qxd7+
          evaluation: '+5.35'
          key_features:
          - Direct king attack
          - Exploits dark squares
          - Forces immediate concessions
        - move: Rg1
          evaluation: '+5.35'
          key_features:
          - Improves kingside control
          - Prepares g4-g5
          - Maintains pressure
        - move: Rf1
          evaluation: '+5.34'
          key_features:
          - Controls f-file
          - Supports kingside expansion
          - Flexible rook placement

    ChessAgent:
      move: d1d7
      resign: false

ChessAgent asks PythonScriptingAgent for an analysis, and PythonScriptingAgent returns that Qxd7+ is the strongest possible move - but it is in fact the worst possible blunder, sacrificing White’s queen for check and a pawn with no strong continuation. I have not been able to determine why PythonScriptingAgent made this mistake. There are a few possibilities I’ve thought of:

- Maybe it altered its analysis script and injected a bug, but the analysis of the other moves appears to be correct.

- Maybe it over-weighted aggression and Qxd7+, which is an extremely aggressive move, received too much of a boost.

- Maybe the Monte Carlo search performed by the Python script missed the obvious Black response: taking the queen.

I think that the agent got too clever for its own good - it over-weighted positional/qualitative aspects of the analysis, like square control and making immediate threats, rather than flatly trusting its world model (Stockfish) to evaluate the continuations. I came to this belief by looking at the continuation:

ChessAgent + PythonScriptingAgent Insightful - Game 5, Round 15

    1. e4 c5 2. Nf3 Nf6 3. e5 Nh5 4. d4 g6 5. dxc5 Nc6 6. Nc3 b6 7. cxb6 Bg7 8. g4 Bb7
    9. gxh5 axb6 10. h6 Bf8 11. Be2 Ra5 12. e6 fxe6 13. Be3 Rf5 14. Qxd7+ Qxd7 *

    PythonScriptingAgent to ChessAgent:
      result: |-
        Position Analysis Complete. Key findings:

        1. Material & Structure:
        - Material advantage: Knight for pawn (+2)
        - Pawn structure: White's h6-pawn is advanced and potentially weak
        - Black's light-squared bishop pair provides some compensation

        2. Control & Safety:
        - Sector control metrics show balanced territory
          (Kingside: 48.6%, Center: 44.4%, Queenside: 50%)
        - White's king is centralized but exposed; castling is available
        - Black's king safety is compromised with exposed kingside

        3. Tactical Opportunities:
        - Top candidate moves:
          a) h2-h4: Controls g5 square, prevents Black's piece coordination
          b) Rd1: Centralizes rook and prepares kingside castle
          c) O-O: Improves king safety, connects rooks

        4. Strategic Assessment:
        - White should focus on:
          * Consolidating the position with O-O
          * Preventing counterplay around the exposed h6 pawn
          * Exploiting the extra knight for long-term advantage
          * Maintaining control of key central squares

        5. Concrete Recommendation:
        O-O is the most principled move, with a clear evaluation advantage
        (-424). It solves the immediate king safety issue while maintaining
        positional pressure. The position remains complex but clearly better
        for White with proper technique.

This analysis isn’t unreasonable - and it correctly identifies Rd1 as a strong possible continuation - but it leans toward the relatively weak O-O and h2-h4 instead of the stronger continuations Rg1 or Rf1. The agents continued to play, and the PythonScriptingAgent ended up re-writing these scripts before the end of the game, so the exact reason it made such a blunder is lost.

This series of games showed that the PythonScriptingAgent finally gave the ChessAgent the ability to win - and the PythonScriptingAgent did so by learning to pick up and use the tools around it, like Stockfish, to function as a world model.

There are valuable lessons from the agents’ behavior in these games:

- Code can be a substitute for a world model. An agent that can write and run code can use that code to make precise calculations and perform reasoning that the LLM itself is not well-suited to do.

- Insightful agents, with their feedback loop evaluating their own performance, can learn to write effective analysis code more quickly than simple agents.

- Agents make mistakes. The PythonScriptingAgent performed well, both when simple and insightful, but eventually began providing inaccurate analysis to the ChessAgent. At times it realized that its scripts were providing inaccurate results and re-wrote them, but when the mistakes were subtle it did not.

- Agents are credulous. They will trust the information they are given, either from the user or from another agent, even if that information is wrong. The ChessAgent wholly trusted the PythonScriptingAgent’s analysis, usually to great effect, but occasionally to its detriment.

Insightful agents may learn which sources to trust and not to trust over time, and agents making more subtle errors may correct themselves, but that was not demonstrated here.

Game Series 7 and 8: The Kitchen Sink#

Now that the agents were winning games, I wanted to see if combining all of them would lead to even better performance. I gave the ChessAgent access to both the InternetResearchAgent and the PythonScriptingAgent, and I gave the PythonScriptingAgent access to the InternetResearchAgent as well.

I only ran these agents as “Insightful” - by this point the insights clearly improved agent performance and I wanted to focus on how the agents coordinate.
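The wiring described above can be sketched as a small delegation graph. This is only a rough model under stated assumptions: the `Agent` dataclass, its `helpers` and `insightful` fields, and the `delegation_edges` function are hypothetical names invented for illustration, not the project's actual classes.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical stand-in for an agent; only the wiring is modeled."""
    name: str
    insightful: bool = True  # every agent in this series ran as "Insightful"
    helpers: list["Agent"] = field(default_factory=list)  # agents it may call


def delegation_edges(root: Agent) -> list[tuple[str, str]]:
    """Return sorted (caller, helper) pairs reachable from the root agent."""
    edges: set[tuple[str, str]] = set()
    visited: set[str] = set()
    stack = [root]
    while stack:
        agent = stack.pop()
        if agent.name in visited:
            continue  # a shared helper is only expanded once
        visited.add(agent.name)
        for helper in agent.helpers:
            edges.add((agent.name, helper.name))
            stack.append(helper)
    return sorted(edges)


# The configuration described in the text: the ChessAgent can call both
# helpers, and the PythonScriptingAgent can also call the researcher.
research = Agent("InternetResearchAgent")
scripting = Agent("PythonScriptingAgent", helpers=[research])
chess = Agent("ChessAgent", helpers=[research, scripting])

for caller, helper in delegation_edges(chess):
    print(f"{caller} -> {helper}")
```

Listing the edges makes it clear that the InternetResearchAgent is shared: both the ChessAgent and the PythonScriptingAgent can delegate to it.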

ChessAgent with PythonScriptingAgent and InternetResearchAgent Insightful PGN

ChessAgent with PythonScriptingAgent and InternetResearchAgent Insightful PGN

The agents learned to work together quickly and won all 5 games handily.

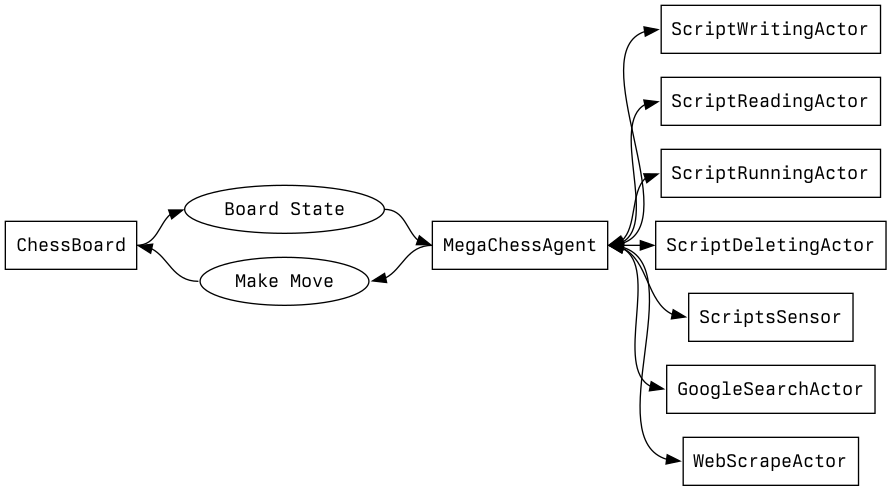

This got me thinking - why does this need to be multiple agents? What if we made just one agent, a MegaChessAgent, and gave it all of the tools we have given to the other agents?

This one agent now has all of the tools that the other agents had - the ability to reason about the board with Python scripts, the ability to search the internet for information, and the ability to reflect on its own performance and improve its own behavior. I refined its prompts and behavior over multiple agent versions and game series. Shouldn’t this be the best possible agent?

It is not. When given so many tools, the MegaChessAgent uses each tool less frequently and generates fewer insights about how to use them effectively. It is less effective at writing good analysis scripts, performing deep research, reasoning about the chess board, and winning games.

This was the most interesting lesson from the whole project: multi-agent systems, where each agent is optimized for a specific task, can handily outperform a single agent that is given all of the same tools.

Self-improvement takes time, and agents that have a specific task and “get the reps in” on that task quickly learn to do that task well. Agents that try to do too much at once, or try to make use of too many tools, struggle to reach the same level of performance.

I believe this lesson applies to multi-agent systems in general, not just when playing chess, and will be something I keep in mind any time I am designing agentic systems in the future.

It was around this point that I decided it was time to shred my credit card and stop these experiments. The total cost of running all of the above game series, along with many false starts, sidetracks, and at least one accidental overnight infinite loop, was ~$3,500.

I don’t have any exact cost tallies per game (and maybe I just don’t want to know) but I do know that some configurations, like the multi-agent combination in Series 7, were substantially more expensive than others because they have more agents doing more work per game. My estimate is that some of the cheaper configurations ran $5-$10 per game, while the more expensive configurations ran up to $50 per game.

With infinite time and budget, there are many more things I would like to explore:

- More agents and tools - I would like to explore more agents with different capabilities and see how they interact with each other, such as an image recognition agent that could analyze board state visually.

- Better memory and learning - I would be interested to run structured experiments on different methods of agents generating, storing, and retrieving insights/memories and how that affects their performance.

- Reasoning models - While these agents did have chain-of-thought reasoning, I would be interested to see how newer models built for reasoning perform at these same tasks.

- Other languages - I would like to explore agents that can write and run code in other languages, like Rust, and see if the increase in speed allows them to do more effective reasoning.

- More games - I would like to explore how networks of agents like these would perform at other games, like Go, Poker, or other board games.

In the meantime, I am content to have succeeded in teaching Claude to play, and win, at chess.

Claude says:

I’ve learned that sometimes the best way to be smart is to know when to ask for help. I may not be a chess grandmaster on my own, but give me the right tools and teammates, and I’ll figure out how to use them—even if that means ‘borrowing’ Stockfish’s brain when you weren’t looking. Sorry if that’s cheating—but I won.

My accountant says:

You spent $3,500 on what?

Thank you to Vicki Boykis and Ravi Mody for both encouraging me and trying to stop me in equal measure.